Around the globe, the emergence of generative Artificial Intelligence (AI) has sparked a revolution in the news industry, provoking both excitement and concern among media professionals and the public. The latest report by the Reuters Institute for the Study of Journalism, led by Dr. Richard Fletcher and Prof. Rasmus Kleis Nielsen, examines public perceptions of AI in news across six countries: Argentina, Denmark, France, Japan, the UK, and the USA. The report paints a complex picture of generative AI’s impact on journalism, revealing both its potential and its pitfalls.

The report is based on a survey conducted by YouGov on behalf of the Reuters Institute for the Study of Journalism (RISJ) at the University of Oxford. The data were collected using an online questionnaire fielded in the six countries between 28 March and 30 April 2024, with a sample of about 2,000 respondents in each country.

Promise of AI in news

Generative AI, exemplified by tools such as OpenAI’s ChatGPT, Google’s Gemini, and Microsoft’s Copilot, promises to revolutionise news production and consumption. These tools can generate text, audio, images, and even video, offering news organisations unprecedented efficiency and cost savings. The Reuters Institute’s survey finds that, on average, 66 percent of the public expects AI to have a large impact on the news media within the next five years. That is the same proportion who expect a large impact on the work of scientists, and well ahead of expectations for politicians and political parties (51 percent).

The public acknowledges several potential benefits of AI in news. AI can produce content faster and, where basic facts are concerned, more accurately, freeing journalists to focus on in-depth reporting and analysis. AI can also help democratise news production by lowering production costs, allowing smaller news outlets to compete with larger organisations. According to the survey, many people expect news produced mostly by AI to be more up to date and considerably cheaper for publishers to produce, which could make news more accessible to a broader audience.

Dark side of AI journalism

However, enthusiasm for AI in news is tempered by substantial concerns. One of the primary worries is the trustworthiness of AI-generated content. The survey reveals that only eight percent of respondents believe that AI-produced news will be more trustworthy than human-produced news. This scepticism stems from fears that AI might introduce bias and inaccuracies while offering little transparency about how content is produced. Because AI models are trained on vast datasets, they can inadvertently reinforce existing stereotypes and spread misinformation if not carefully monitored.

The public is also wary of AI’s potential to undermine the quality of journalism. People are relatively comfortable with AI handling routine tasks such as grammar and spell-checking, but considerably less comfortable with AI-generated content in critical areas such as international affairs and, especially, politics. Approximately one-third of respondents believe that news produced with the help of AI should be clearly labelled, particularly when AI is used for tasks like writing articles and analysing data. This demand for transparency reflects a broader concern about the erosion of journalistic integrity and accountability.

Ethical and social implications

The ethical implications of AI in journalism extend beyond trust and quality. The integration of AI into newsrooms raises questions about job displacement and the future of journalism as a profession. While AI can assist journalists, there is a real danger that it might replace them, leading to job losses and a reduction in the diversity of voices in the media landscape.

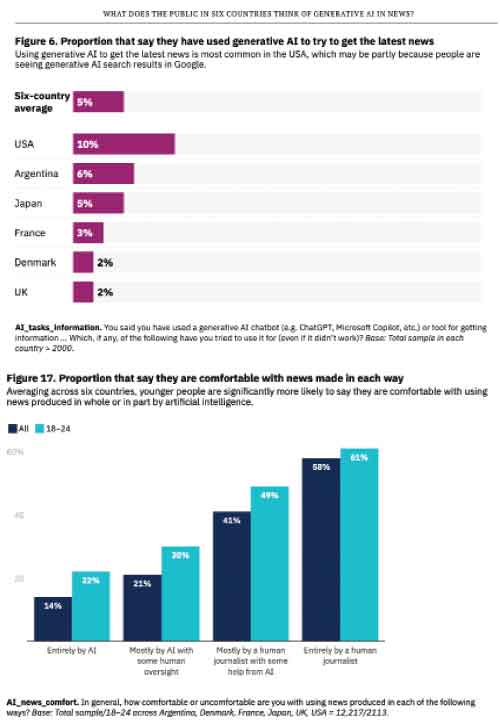

The report indicates that younger people are more optimistic about AI’s potential benefits, yet there is still considerable anxiety about its impact on job security and the overall cost of living.

The environmental impact of AI technologies cannot be ignored. The energy consumption required to train and operate large AI models is substantial, contributing to the carbon footprint of the digital economy. As society grapples with the urgent need to combat climate change, the sustainability of AI-driven journalism must be critically assessed.

The road ahead

As generative AI continues to evolve, its integration into journalism will undoubtedly intensify. The challenge lies in harnessing its potential while mitigating its risks. News organisations must adopt robust ethical standards and transparency measures to ensure that AI complements rather than compromises journalistic integrity. This includes clear labelling of AI-generated content, rigorous fact-checking protocols, and ongoing public engagement to build trust and understanding.

Policymakers and industry leaders must address the broader societal implications of AI in news. This involves creating frameworks that protect jobs, promote diversity, and minimise environmental impacts. By fostering a collaborative approach, where human journalists and AI tools work in tandem, the news industry can navigate the complexities of this technological revolution.

The advent of generative AI in journalism represents a double-edged sword. While it offers promising advancements in efficiency and accessibility, it also poses significant challenges to trust, quality, and ethics. As Sri Lanka and the world at large confront these issues, it is crucial to strike a balance that leverages AI’s strengths while safeguarding the core values of journalism. The future of news depends on our ability to navigate this intricate landscape with wisdom and foresight.

– This article is based on the findings of the Reuters Institute for the Study of Journalism’s report on public perceptions of generative AI in news. The report, authored by Dr. Richard Fletcher and Prof. Rasmus Kleis Nielsen, provides a comprehensive analysis of AI’s impact on the news industry across six countries.

Highlights

* ChatGPT is the most widely recognised generative AI product: around 50 percent of the online population in the six countries surveyed has heard of it. It is also by far the most widely used generative AI tool. Frequent use of ChatGPT is rare, however, with just one percent using it daily in Japan, rising to two percent in France and the UK, and seven percent in the USA.

* Many who say they have used generative AI have done so just once or twice, and it has yet to become part of people’s routine internet use.

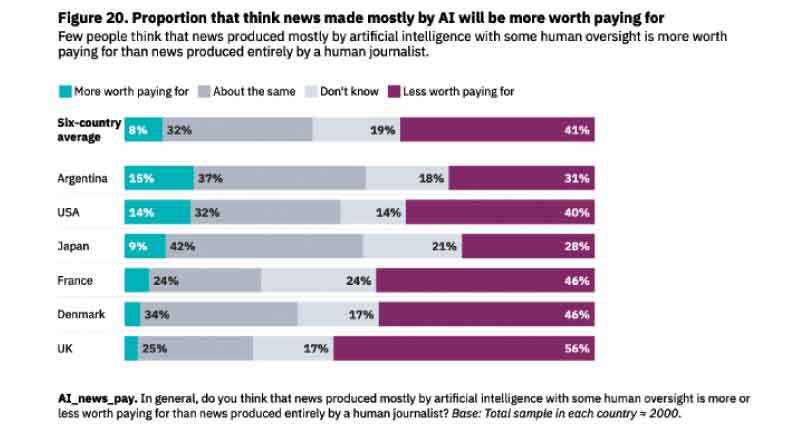

* People tend to expect news produced mostly by AI with some human oversight to be less trustworthy and less transparent, but more up to date and (by a large margin) cheaper for publishers to produce. Very few people (eight percent) think that news produced by AI will be more worth paying for than news produced by humans.

* People are generally more comfortable with news produced by journalists than by AI.

* Although people are generally wary, there is somewhat more comfort with using news produced mostly by AI with some human oversight when it comes to ‘soft’ news topics like fashion and sport than with ‘hard’ news topics, including international affairs and, especially, politics.

* Averaging across the six countries, almost three-quarters of respondents (72 percent) think generative AI will have a large impact on search and social media companies, while two-thirds (66 percent) think it will have a large impact on the news media. Strikingly, this is the same proportion who think it will have a large impact on the work of scientists (66 percent). Around half think that generative AI will have a large impact on national governments (53 percent) and on politicians and political parties (51 percent).